Robotic Rhythm - AI in Music 🦾

How much money did the BVMI and the CNM estimate fake streams generated in 2021: $194 million, $257 million, or $525 million? 💰

Welcome to this week's SongsBrews newsletter! You can find previous issues in your User area.

A huge 👋 to all our new subscribers; we're happy to have you on board!

This issue:

- ‘Robots’ stealing revenue.

- Ircam Amplify’s huge AI breakthrough.

- YouTube and The Big Three in AI talks.

- Using AI to create playlists.

- An AI copyright lawsuit with more to it than meets the eye.

Scripts and Bots Siphoning Revenue

Spotify has recently made a few controversial remarks, including how ‘easy’ it is to create content, and announced that tracks with fewer than 1,000 plays will be demonetized. This week, it is also asking artists to discourage fans from ‘inorganic’ music streaming.

Many small artists, already having a hard time, weren’t happy about the announcement at first, but clarification soon came: Spotify has released more information and said that by ‘inorganic’ it means bots and scripts.

Bots and scripts were responsible for an estimated 3% of Spotify streams and up to 7% on Deezer (reported by BVMI and the CNM). This ongoing problem has siphoned royalties away from legitimate artists who promote their music correctly (using tools like Music24 and social media, for example).

DSPs are investing in improved software that tracks suspicious activity and can now find bots and scripts and remove fake streams, tracks, or artists. The margin for error is still large enough that some smaller artists see legitimate tracks removed, though. Fine-tuning is required, but it is a good step forward in the streaming cleanup.

While bots and scripts aren’t strictly AI, new AI tools are being used to combat them.

🤖Battle of the robots?🤖

📢 Remember, you can check your dashboard anytime for trends in music streaming. Freshly ground data served up piping hot.

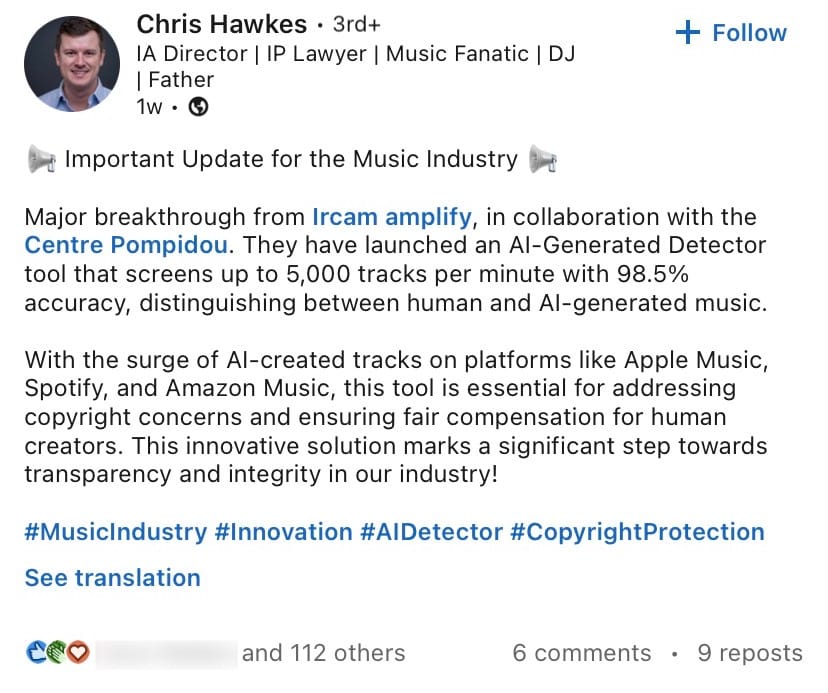

Hot AI LinkedIn Discussions

There have been some discussions circulating on LinkedIn about the Ircam Amplify breakthrough. Working with Centre Pompidou, Ircam Amplify has launched an AI-generated music detector that screens around 5,000 tracks per minute. It can distinguish between AI-generated and human-made music with a reported 98.5% accuracy.
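To put those two figures in perspective, here is a rough back-of-the-envelope sketch. It uses only the reported throughput and accuracy; the assumption that the error rate applies equally to every track is ours, not something Ircam Amplify has stated, and the function name is purely illustrative.

```python
# Figures reported above; everything else is an illustrative assumption.
TRACKS_PER_MINUTE = 5_000
REPORTED_ACCURACY = 0.985


def expected_misclassified(tracks: int, accuracy: float = REPORTED_ACCURACY) -> int:
    """Expected number of wrongly labelled tracks.

    Assumes the same error rate for every track (AI-made or human-made),
    which is a simplification and not a reported fact.
    """
    return round(tracks * (1 - accuracy))


tracks_per_hour = TRACKS_PER_MINUTE * 60
print(tracks_per_hour)                          # 300000
print(expected_misclassified(tracks_per_hour))  # 4500
```

Under that simplification, even a 98.5% accurate detector running at full speed could mislabel a few thousand tracks an hour, which is why fine-tuning matters for smaller artists.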

Why do we need this?

In recent years, Spotify, Amazon, and Apple Music have been flooded with AI-generated music. Spotify users even reported at the end of 2023 that their Release Radar playlists (one of the platform's most popular playlists) consisted almost exclusively of AI music.

The issue is that these AI songs get paid just like real artists and often use samples and music without copyright approval. The crackdown will be positive for real artists, as it will protect the integrity of the music on streaming platforms and ensure that the payments small artists already have to fight for aren't diminished by fake music.

YouTube, UMG, Sony Music and WMG.

AI is a hot topic in the music industry (as you can tell), and while it can help artists achieve incredible things, it can also mean that people with no musical skills can use AI music to make money. The issue that big record labels and publishers have (and are suing over) is that these tracks are built using the music of legitimate artists, often breaking copyright.

The solution? Ask for a license to train your AI generators legally. YouTube is in talks with the Big Three (Sony, Warner, and Universal) to get more artists to agree to be used in its generative AI software.

YouTube previously released Dream Track, with ten consenting artists taking part: An early look at the possibilities as we experiment with AI and Music. But this is not an expansion of that; these talks are to get a blanket license with a one-off payment to the media groups.

It could be an exciting development, with a legal way to create AI music. But it remains to be seen if the created tracks could ever be allowed on streaming services. Since DSPs are developing AI to prevent AI music from making revenue, YouTube could possibly be the only place to host such music.

Playlists using AI

There is much discussion about using AI in music creation and how it hurts the music industry. But you can use AI to make playlists without having a negative impact. In a matter of minutes, you can create a playlist that fits your mood or the task that you are doing.

While excellent playlist creation is a fine art, having an algorithm do the heavy lifting for you can be an incredible start.

Two major music streaming platforms have invested in AI playlist creation: Spotify and Amazon Music. With a Spotify Premium subscription in the UK or Australia (on Android), you can get access to the AI Playlist feature.

Amazon Music made Maestro (its AI playlist generator) available to all subscription tiers in the US. It is a fun way to use AI without harming any artists.

Have you tried any AI tools to build playlists?

Suno and Udio

Suno and Udio are two startups currently in (very) hot legal waters. Their technology can produce an 'original' song using AI and minimal text input. Both are currently in a landmark legal tangle with Sony Music, Warner Records, and Universal Music Group.

If you’re thinking, ‘What could two small startups have done to warrant such a huge response?’, the answer is that they didn’t want to pay to license the music they trained their AI on.

Earlier in the year, a group representing the major record labels decided to pursue a single, aggressive strategy and sue for copyright infringement. However, this is where it gets a little more interesting.

Both Suno and Udio are helping users create 'unique' music, and the record labels aren’t claiming that the music itself is the issue. Instead, they have gone for a different approach. They are suing over copyright infringement because the AI was trained using copyrighted work, which requires permission.

So, what do the record companies want? An admission of breaking copyright rules, a stop to any activities that infringe that copyright, and damages for past harms.

The compensation demanded is $150,000 (USD) per work.
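A per-work figure adds up quickly. The short sketch below multiplies the $150,000 demand by a few hypothetical work counts; the counts are placeholders chosen for illustration and are not the numbers named in the lawsuits.

```python
# Per-work figure from the text above.
DAMAGES_PER_WORK_USD = 150_000


def total_claim(works: int) -> int:
    """Total damages in USD if the full per-work amount applied to every work."""
    return works * DAMAGES_PER_WORK_USD


# Hypothetical work counts, for scale only.
for works in (10, 100, 1_000):
    print(f"{works:>5} works -> ${total_claim(works):,}")
```

At 1,000 works, the total would reach $150 million, which helps explain why licensing talks look attractive by comparison.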

Lawsuits like this are why YouTube has taken a different approach and is instead in talks. The increased use of AI in music has created multiple blurred lines and muddy waters.

As it unfolds, the case will set new boundaries and guidelines for future AI work. This is one to watch.

Did you get it right? It is estimated that $525 million of streaming royalties are from fraudulent streams: Fake Music Streams: Why the Industry Can't Solve Its Fraud Problem.

Quick tips:

Use FreeYourMusic for smooth music transfers (up to 600 for free).

Playlist curator? Artist? This is for you: Music24. Grow streams (without bots).

Have you tried out the AI Playlist creator yet?

Thanks for being part of the SongsBrew community,

Until next time!

Exclusive access to the music metrics that matter sponsored by Music API & FreeYourMusic.